Trans-Actor: A UX for AI

Trans-Actor: A UX for AI explores how conversational user interfaces can feel more human by imitating humans less. The project proposes a new form of nonverbal communication to supplement current AI communication strategies. This language relies less on words, substituting motion, light, behavior, and nonverbal tones.

Trans-Actor is a collaboration with Sche-I Wang and Xing Lu.

It won the award for "Most Thought-Provoking" at the 2016 Microsoft Design Expo.

Why do current conversational interfaces imitate humans? Computers have different strengths and weaknesses from us, they make decisions differently, and they literally speak a different language.

Computers need their own unique identity.

We believe that this new identity can help users better understand how their devices work, so that they can perform more customized tasks, run more productive searches, and have more collaborative interactions. But in order to better collaborate with our computers, we need a sort of creole, a lingua franca. What we are proposing here is a designed language for conversing with AI-based computational actors such as conversational interfaces. This new language can mediate communication between human and computational actors, making the human-computer relationship resemble talking with someone from a different culture. We argue that designing a user experience for transparency will create this new computational identity. By transparency, we mean that the user should be able to understand what the computer is doing and why. In this way, conversations can become more nuanced and meaningful.

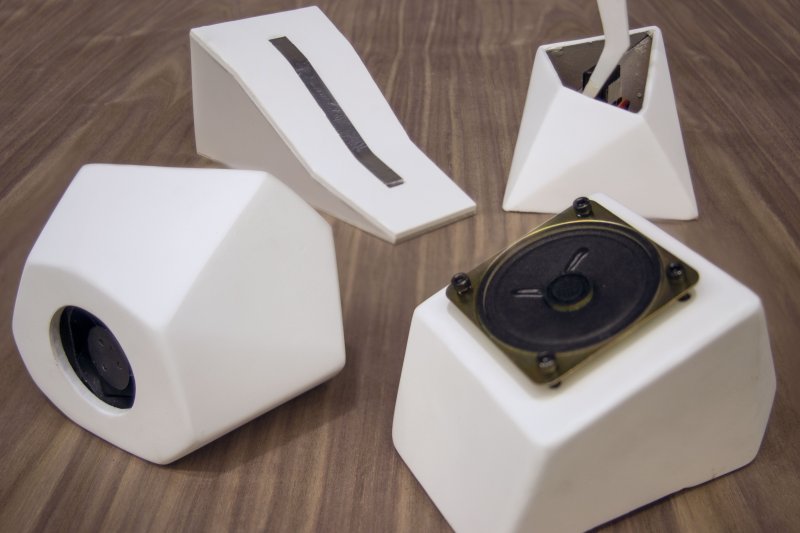

To create this new, transparent language, we ran three studies. We built a functional conversational user interface (CUI) to explore how such interfaces could communicate emotions. We built a chatbot that responds only with GIFs to explore how they might respond nonverbally. And we made a series of form studies to explore what a natively computational form might look like. Based on this research, we developed four working prototypes that use sound, light, motion, and behavior to communicate nonverbally. We transformed the current notion of a conversational interface, a disembodied voice in a black box, into an embodied set of networked objects. These objects communicate with the user through distinct modalities, which facilitate the conversation and help the user understand subjectively what is happening with the device. We isolated each method of nonverbal communication in its own module in order to better understand its effect.

The light module shows how the computer is listening to the user. It uses the color and brightness of the LEDs to give a general impression of how the computer is receiving the input. This is analogous to how we use facial expressions in communication.

The audio module reveals the computer's next actions. We've used tones to indicate that the computer is thinking, and to communicate some of the details about the task it is about to start. This is modeled after filler words like "um" and "uh". These words help to indicate the speaker has more to say.

The motion module indicates the status of the task the computer is currently working on. It functions as gestures and postures do in human-to-human communication, revealing the response to current actions.

The behavior module shows the general status of the computer. Just as you can tell when someone is stressed or tired by their actions or the bags under their eyes, the user can tell how taxed the computer is by the speed of the fan cooling the CPU.
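One way to picture how the four modules divide the work is as a mapping from the computer's internal state to four separate output channels. The sketch below is purely illustrative and is not the code behind our prototypes: the `AgentState` fields, the function names, the red-to-green color ramp, the tone names, and the fan-speed range are all assumptions chosen for the example.

```python
from dataclasses import dataclass


@dataclass
class AgentState:
    """Hypothetical snapshot of the computer's state (all fields are assumptions)."""
    input_confidence: float  # 0.0-1.0: how well the user's input was received
    is_thinking: bool        # True while the next action is being prepared
    task_progress: float     # 0.0-1.0: progress of the current task
    cpu_load: float          # 0.0-1.0: how taxed the machine is overall


def clamp(value: float) -> float:
    """Keep a value inside the 0.0-1.0 range."""
    return max(0.0, min(1.0, value))


def light_signal(state: AgentState) -> dict:
    """Light: how the computer is listening (assumed red-to-green ramp)."""
    c = clamp(state.input_confidence)
    return {
        "rgb": (round(255 * (1 - c)), round(255 * c), 0),
        "brightness": round(0.2 + 0.8 * c, 2),
    }


def audio_signal(state: AgentState) -> str:
    """Audio: a filler tone while thinking, like "um" or "uh"."""
    return "hum" if state.is_thinking else "silence"


def motion_signal(state: AgentState) -> float:
    """Motion: task status expressed as an arm angle in degrees (0-180)."""
    return 180.0 * clamp(state.task_progress)


def behavior_signal(state: AgentState, min_rpm: int = 800, max_rpm: int = 3000) -> int:
    """Behavior: general status expressed as fan speed (assumed RPM range)."""
    return round(min_rpm + (max_rpm - min_rpm) * clamp(state.cpu_load))


def render(state: AgentState) -> dict:
    """Collect the four nonverbal channels for one state snapshot."""
    return {
        "light": light_signal(state),
        "audio": audio_signal(state),
        "motion": motion_signal(state),
        "behavior": behavior_signal(state),
    }


if __name__ == "__main__":
    print(render(AgentState(input_confidence=1.0, is_thinking=True,
                            task_progress=0.5, cpu_load=0.5)))
```

Keeping each channel in its own function mirrors the decision to isolate each modality in its own module, so the effect of one channel can be studied without the others.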

We think this sort of communication system could assist in higher-level decision-making. For example, a scriptwriter might use it to help work out the details of a scene. Our hope is that these four modules (sound, light, motion, and behavior) facilitate conversation by augmenting standardized voice output with nonverbal, subjective indicators. These add an element of behavior that is native to computational devices yet resembles the nonverbal communication we know from speaking with other people.

We explored this new UX language for artificial intelligence in order to create a distinctly computational identity, which we think could help users better understand and converse with computational agents. We chose these forms to move away from the human-centric and toward a native computational appearance. In addition, we wanted to encourage physical interaction with the user so that the two can develop trust, learn each other's habits, and form a close relationship. The system aims to communicate through multiple channels and with redundancy. Its transparent user experience design helps the user better understand how the system operates, which changes how the user interacts with it and, in turn, allows the system to better understand the user. With this altered behavior, the system can afford more in-depth interactions and help create a more collaborative relationship.

From these prototypes, we've found that knowing more about what the computer is doing or how it works makes the computer strangely more personable. Can we really relate more to computers by making them less like us? With a more conversational interaction, how do we differentiate between a conversation and a command? What is the relationship between the user and the machine? When a computational agent has its own identity, what characteristics emerge as native behaviors or qualities, and do those qualities change from device to device? Does the brand affect its characteristics? Can the user affect the behavior? We hope this project is an interesting provocation that leads to further experimentation and user testing, and that it helps change the way we think about the user experience and design of artificially intelligent and conversational systems.